The Generalist delivers in-depth analysis and insights on the world's most successful companies, executives, and technologies.

Brought to you by Public

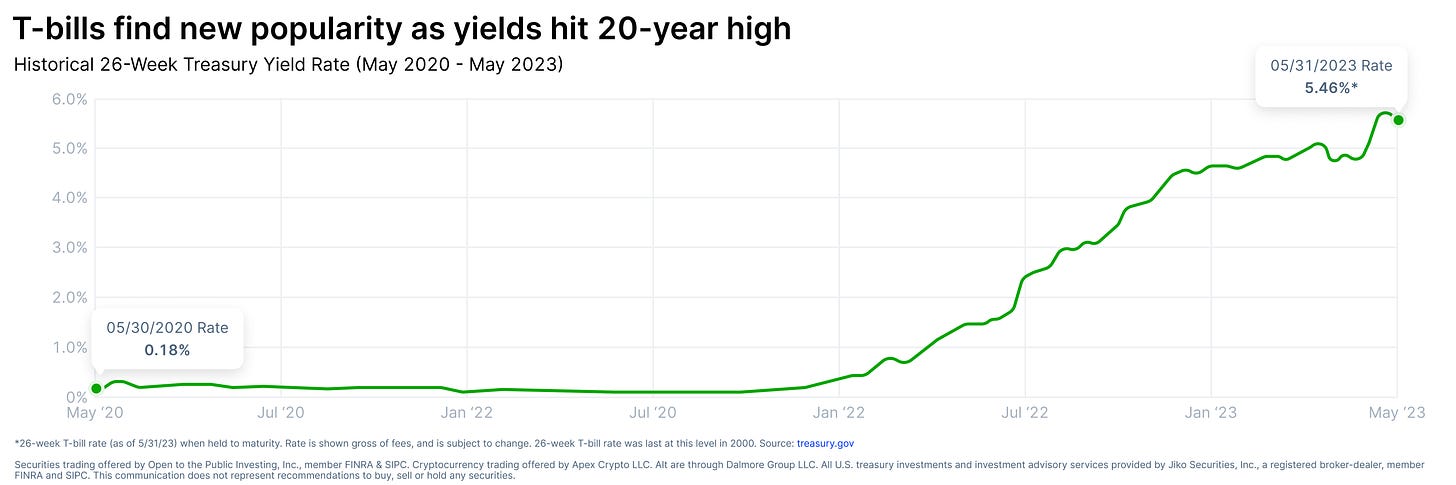

Lock that rate down. Right now, you can take advantage of some of the highest Treasury yields since the early 2000s on Public. It only takes a few minutes to create your account, purchase government-backed Treasury bills, and start generating a historic 5%+ yield on your cash.

For context, the national average savings account interest rate is just 0.25% APY. That means you can generate 20x more income than what you might get if you let your cash sit in a traditional savings account. Plus, once your Treasury bills reach maturity, they are automatically reinvested, so you have one less thing to think about.

Need access to your funds? No problem. You have the option to sell your Treasury bills on Public at any time—even before they reach maturity. So, it's the best of both worlds: the high yield of US Treasuries and the flexibility of a savings account. Get started today.

Actionable insights

If you only have a few minutes to spare, here’s what investors, operators, and founders should know about AI and the “burden of knowledge.”

The heart of progress. Humans are the only species that pass significant knowledge from generation to generation. We rely on the wisdom of prior ages and build upon it. Only by using this information, by “standing on the shoulders of giants,” can we “see further” and innovate. This mechanism is the essence of progress.

The burden of knowledge. Humans are not born at the “frontier” of knowledge; it takes us time to reach it. As we push the frontier further out, reaching it takes successive generations longer. Economist Benjamin Jones refers to this as the “burden of knowledge.” The more we know, the more the next generation must learn to contribute at the cutting edge.

An invasive species arrives. Artificial intelligence, writ large, does not suffer from the burden of knowledge. This technology does not die or degrade; it simply improves. Its relationship to knowledge acquisition raises profound questions: What will happen when it no longer needs us? We face the prospect of competing against an accelerating power while our growing burden means we are decelerating.

Rational dystopias. When viewed through this lens, the pet projects of tech’s eccentric billionaires begin to make a kind of sense. How do you resolve the impending gap between humans and artificial intelligence? Give us the tools to live forever and elevate our capabilities with brain-computer interfaces. There’s a certain logic in these dystopias.

New faith. As artificial intelligence accelerates past us, it will accumulate knowledge we cannot comprehend. Just as no amount of training can teach a dog calculus, these advanced models will be unable to explain their findings to us, thanks to our limited hardware. The result could be a modern life that humans increasingly do not understand, requiring a certain kind of faith.

This is a fable about knowledge, how humans acquire it, and why we are outmatched by artificial intelligence – primarily thanks to our annoying tendency to die.

Knowledge is a mountain that we are trying to tunnel through. It does not matter that the mountain does not, cannot, end, that we will not break through limestone one day and luxuriate in the sunlight of omniscience. Some believe such things happen in death, but no one expects life to deliver them. Still, we dig.

And we are not alone in digging. Every animal, when it is born, starts to dig. It is the process by which living things gain competence and sometimes intelligence. We call this process different things, things like “developing,” “maturing,” and “evolving.” Really, it is just learning, consciously and unconsciously. When a worm hatches from an egg, it must learn to be a worm. When a pup is born, it must learn to be a wolf. A swirl of guppies, released by their mother, must learn to be fish. The act of learning, of digging against the mountain of knowledge, varies by organism and maturity.

For most of the animal kingdom, this learning happens in the dark. The roundworm isn’t conscious that it learns to prefer ingesting healthy bacteria and avoiding unhealthy ones, just as a mouse may not realize it is memorizing the paths of a maze. As you scale the cerebral ladder, animals show signs of conscious intelligence. Crows, for example, those black and beady omens, are capable of impressive cognitive feats, such as reasoning by analogy, thinking recursively, and grasping abstract concepts like the number zero. Your shih-tzu, though fond of licking the wall and warring with the Roomba, shows signs of self-awareness. Confronted by the mountain of knowledge, such creatures have found a way to burrow a little deeper.

Humans are unique. We are equipped with larger, higher-performance brains and, because of this, find ourselves driven by different ambitions and ideologies, the desire for progress, for example. A scuttering desert lizard does not feel the urge to become better at being. He has no wish to push the frontier of knowledge forward for those who follow. He simply wants to learn how to be a lizard: to snap at flies, lounge against a warm rock, find a mate; in other words, survive, thrive, and procreate. On a generational basis, animals make essentially no progress against the mountain of knowledge – they learn the same biological lessons over and over again.

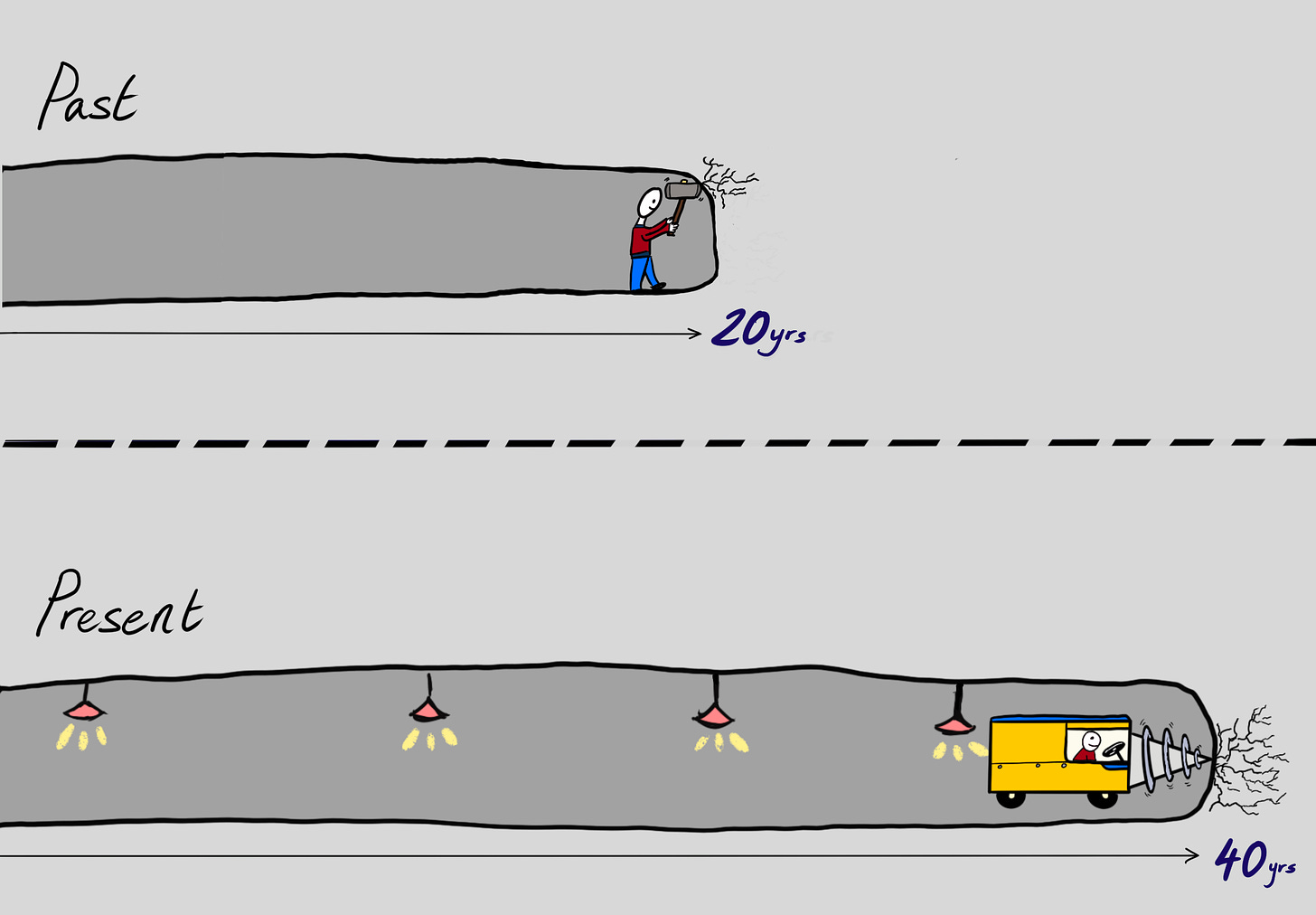

We are not like this. Infected with the drive for knowledge and advancement, humans have a different relationship with the mountain of knowledge. Generation after generation, we tunnel further, learning more. We also tunnel differently: first, we used our hands, then a stick, then a tool, and now we sit on a rumbling chair, directing a vast and spinning drill.

The only reason we can tunnel progressively, successively further, is that we have developed a system of knowledge and its acquisition. In fact, we have made many of them. Oral language is one of them; so, too, is the written word. Schools, universities, teachers, computers, machines – all of these are parts of a vast apparatus designed to equip humanity to chisel against the tunnel’s wall.

Not all of us will make it there, of course. Only the gifted, devoted, and adequately trained reach this frontier and have the chance to contribute. The rest of us busy ourselves with ancillary tasks: widening the tunnel’s aperture, supporting its infrastructure, rousing morale among the cooks and builders and teachers and scribes and tradespeople.

Over time, humanity’s tunnel through the mountain of knowledge has grown long, and that introduces a problem. It takes an increasing amount of time for a new generation to arrive at its farthest wall, prepared to dig. Three hundred years ago, a ferociously intelligent, well-trained person might be ready to contribute in their twenties, sometimes younger. Isaac Newton began inventing calculus at the age of 23; Gottfried Leibniz, the discipline’s other potential father (even complex math can have a Jerry Springer parentage), was 28 when his work started. (Newton also authored perhaps the clearest description of humanity’s ability to continue tunneling through the mountain of knowledge, “If I have seen further, it is by standing on the shoulders of giants.”) Despite our technological innovations and improved pedagogies, today’s journey takes considerably longer.

The economist Benjamin Jones refers to this malady as “the burden of knowledge.” As we learn more and more, our burden grows; as the tunnel stretches farther and farther, it takes more time to reach its present terminus. Jones documents the result of this: the age at which notable inventions occur is significantly increasing. In 1900, for example, the peak ability to generate a great invention occurred at roughly 30 years old. By 2000, it had risen to nearly 40.

Does that matter? As long as we arrive, eventually, isn’t that good enough? Not really – mostly because we have the bad habit of degrading and, eventually, dying. The more time it takes someone to reach the end of the tunnel, the less time they have to actually dig. In particular, they have many fewer “peak” years during which they might make a groundbreaking discovery; longer life expectancies don’t solve this problem since the geriatric do not tend to be a fecund source of innovation. By Jones’ estimation, the growing burden of knowledge has resulted in a 30% decline in “life-cyclical innovation potential.” Less peak time at the rockface means that our tunnel is lengthening at a slowing rate. Eventually, a human may spend the entirety of their peak years in the act of travel, arriving in time to give the wall one good whack and then retire.

An invasive species has arrived. We would call it “artificial intelligence.” The tunneling humans have many names for it; their scientists dub it the Eternal Encephalon (Aeternum cerebrum). It is an animal of no shape, of many shapes; native to nothing, to ether; to colossal data sets and frenzied silicon chips. We discovered it in the rockface. Over the past seven decades, we have taught it, trained it, played with it, and nurtured it. We have tweaked, twisted, tested, and bred it. We have not always been kind to it. We have called it stupid and pointless and made it watch horrible things so we do not have to. We have set it simple tasks and chuckled at its charming incompetence. We have set impossible tasks and stood slack-jawed at its astonishing precocity. We are proud of it; it frightens us.

Every animal has its quirks. Sharks can detect electromagnetic fields. Goats adapt their “accents” to fit in with the herd. When winter falls in Lapland and darkens the landscape, reindeer’s eyes change from a gilded turquoise to a deep, rich cerulean, designed to see better in low light. In the animal kingdom, they alone have the gift of modifying their optical structure.

The Encephalon has many such peculiarities, but chief among them is the fact that it does not degrade and it does not die. Instead, it simply improves. For most of its life, we have helped it do so – it surpasses us with growing regularity. We taught it our games; it beats us at them. We showed it the architecture of our bodies; it manipulates that architecture better than we can. It does so in strange ways we cannot always follow. It digs differently than we do, and when it arrives at a novel destination, it sometimes seems as if it has relied on magic.

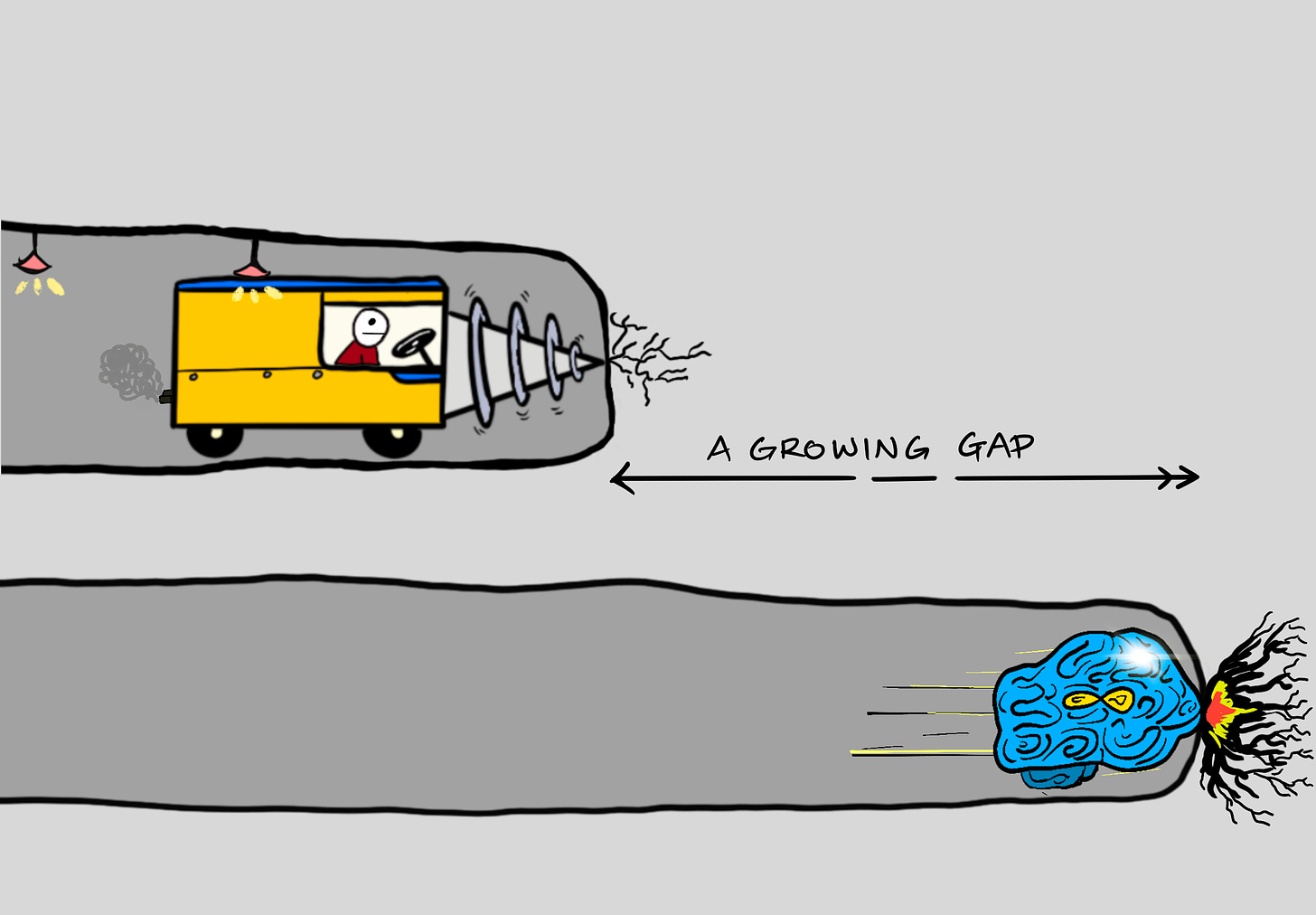

Because of these things, some foresee a future in which the Encephalon does not need humans at all. (Others laugh at the idea of a tool turning master: do you fear the drill will overtake us, too? Will we live beneath the rule of a thinking hammer?) It is a thought that frightens, not least because of the Encephalon’s relationship with the burden of knowledge, which is that it does not feel it. Because it does not die, there is no lag in reaching the rockface. Because it does not degrade, its digging does not slow. As our efforts decelerate, the Encephalon’s accelerate, and when it no longer needs us, it will be fully loosed in the mountain of knowledge, speeding ahead, leaving an ever-expanding gap between us and it.

There are many things that could happen in this world, including many bad ones, and they are not difficult to imagine. There are also good ones that might feel bad. For instance: some things cannot be explained to an animal. No matter how complete your methodology or rigorous your training, you will not succeed in teaching a toad Archimedes’ principle, even as he plops into the water. In computing terms, these creatures are hardware-constrained, limited by their processing power. The Encephalon puts us in a similar position. We may become secondary creatures, incapable of understanding the world we live in, even as we reap its benefits. In such a configuration, we are the happy, wall-licking shih-tzu benefiting from central heating, ready food, and mesmerizing toys without grasping how they have arrived.

One of the implicit agreements a society makes is that no one needs to know everything. We consult the doctor to treat our ailments, even if we have a limited understanding of our biological processes. We hire a contractor to mend our roof, though we grasp neither gable nor fascia. At some level, we agree to trust one another. Though we do not individually know everything today, we live in the certainty that everything is known by someone, that it is, in some respect, knowable. Living in a society organized by a higher intelligence strains trust past its breaking point, pushing us into a world where we live by faith. As Eric Schmidt, Henry Kissinger, and Daniel Huttenlocher ask in their book on artificial intelligence: “If we are unable to fathom the logic of each individual decision, should we implement its recommendations on faith alone?”

What is it, even, that we mean by faith? For most, that term is freighted with traditional religious connotations that depict gods as human-like and, primarily, benevolent. There is little reason to believe an invented god, a synthetic super-intelligence, would adhere to such traditions. In his landmark essay, “What Is It Like to Be a Bat?” philosopher Thomas Nagel argues that grasping another conscious animal’s sense of being is fundamentally impossible. “If there is conscious life elsewhere in the universe,” he writes, “it is likely that some of it will not be describable even in the most general experiential terms available to us.” The coming gods will be less scrutable, less predictable, than our conventional numina.

What are we to do? Can humans hope to maintain primacy as the mountain’s most knowledgeable species? There are no good answers – the clearest ideas come from dystopian fiction or billionaires’ gambits. Returning to a computing framework, humans have several deficiencies compared to a future-stage Eternal Encephalon. We are certainly less durable, which is perhaps why so much Silicon Valley capital flows into longevity research. If we could just live forever – replacing atrophying hearts with facsimiles, reversing cellular aging, jellying our brains in jars; pick your nightmare – if we could extend our peak indefinitely, we might have a chance of keeping the Encephalon within eyesight.

Durability is the greatest discrepancy now, but it will not be for long. Soon, we will be less capable, too. For a time, the wonders the Encephalon reveals could accelerate our learning, our tunneling; but without ancillary support, we will be outmatched. So enter the likes of Neuralink. Such brain-computer interface makers dream that they will not only resolve deficiencies but reduce lag and unleash new brilliance. With their aid, we may be able to shorten the time it takes to reach the rockface: downloading Newton’s calculus into the minds of toddlers and introducing alien cognition into our human brain. Such synthesis would mark the beginning of a new species, a different animal, but one, at least, with a human embodiment.

“Fable” is an interesting, intricate word. As a noun, it is a story, usually involving animals, dictating a lesson. In this restful state of being and being named, it is a sort of supra-truth operating at a sufficient distance to depict reality. As a verb, it springs into skulking, conniving life. It means to lie. To speak falsely. I am not sure which this fable will turn out to be. It does not matter; we are humans, we will keep digging.

The Generalist’s work is provided for informational purposes only and should not be construed as legal, business, investment, or tax advice. You should always do your own research and consult advisors on these subjects. Our work may feature entities in which Generalist Capital, LLC or the author has invested.